Intro

This is part 2 of my series on using the Uno Platform to write a cross-platform app that can target both single- and dual-screen devices. In this post I cover the infrastructure used to collate and aggregate the data used by COduo, as a prelude to a deeper dive into the implementation of the app itself, which I will cover in later posts.

Here are links to all the posts I have written - or intend to write - for this series:

- Part 1 - Background

- Part 2 - Infrastructure

- Part 3 - Client Architecture

- Part 4 - Using the TwoPaneView

- Part 5 - Implementing the interactive UK Map

- Part 6 - Charts on the Uno Platform

- Part 7 - Windows, Win10X and releasing to the Microsoft Store

- Part 8 - Android and releasing to the Google Play Store

- Part 9 - iOS and releasing to the Apple App Store

Infrastructure

While considering how to implement COduo, I needed to ensure the app could retrieve all the data it required quickly, efficiently, securely and - most importantly - cheaply. As such, I decided to introduce service infrastructure that would perform all the required data collation, aggregation and serialization, so that the app merely had to retrieve a single file from a known URI.

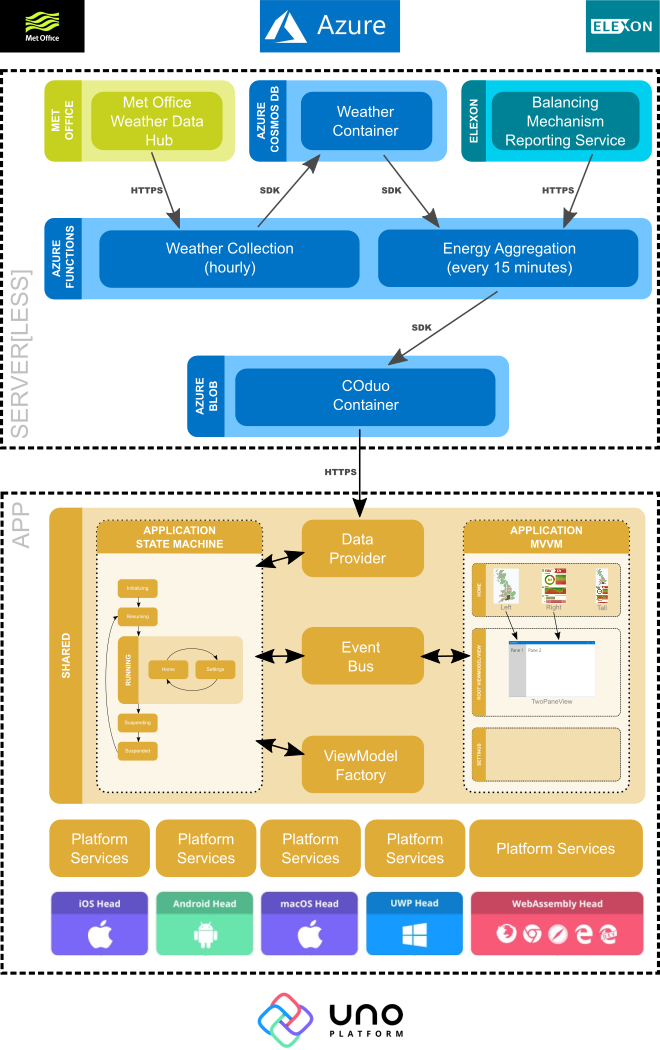

Here is a mile-high view of the infrastructure used to operate COduo and the architecture of the app's various components:

As the primary focus of this series is the Uno Platform, I won't dig into the service-side components too deeply. However, I feel it's important to show how the infrastructure delivers on the requirements above, because that is what keeps the app's implementation simple.

Server[less]

Fundamentally, the infrastructure is provided by two timer-triggered Azure Functions: "Weather Collection" and "Energy Aggregation". These 'serverless' functions collate, process and store all the data required by the app, greatly simplifying client data access.
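The function signatures below pass `WeatherNormal` and `EnergyNormal` to their `TimerTrigger` attributes, but the post doesn't show how those constants are defined. As a minimal sketch, assuming they are plain NCRONTAB strings matching the frequencies given for each function (hourly for Weather Collection, every 15 minutes for Energy Aggregation), they might look like this. The `Schedules` class name and the exact expressions are assumptions; in the real code the constants are presumably members of the functions' own class, since they are referenced unqualified.

```csharp
// Hypothetical definitions: the post references WeatherNormal and EnergyNormal
// but does not show their values. Azure Functions timer triggers accept
// six-field NCRONTAB expressions: {second} {minute} {hour} {day} {month} {day-of-week}
public static class Schedules
{
    // Fires at second 0, minute 0 of every hour (00:00, 01:00, 02:00, ...).
    public const string WeatherNormal = "0 0 * * * *";

    // Fires at second 0 of every 15th minute (hh:00, hh:15, hh:30, hh:45).
    public const string EnergyNormal = "0 */15 * * * *";
}
```

As an alternative design, Azure Functions can also resolve a timer expression from an app setting by wrapping the setting's name in percent signs, e.g. `[TimerTrigger("%WeatherSchedule%")]` (where `WeatherSchedule` is a hypothetical setting name), which lets the schedule change without redeploying.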

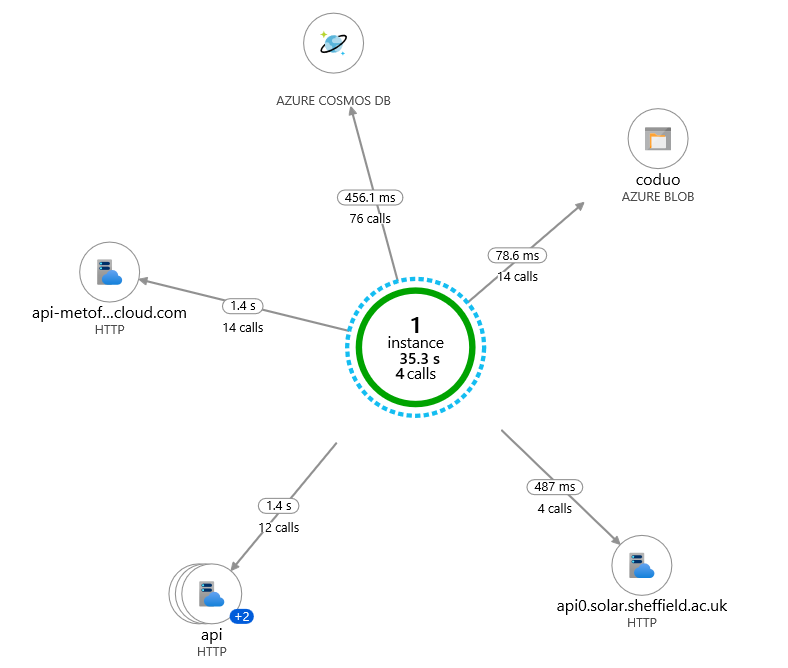

Here's the (Application Insights generated) application map:

Weather Collection

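```csharp
[FunctionName("WeatherV1")]
public static async Task Weather(
    // Fires on the WeatherNormal schedule (hourly).
    [TimerTrigger(WeatherNormal)] TimerInfo timer,
    // Output binding: every document added to documentsOut is written to the weather container.
    [CosmosDB(
        databaseName: CosmosDatabase,
        collectionName: WeatherCollection,
        ConnectionStringSetting = CosmosConnectionStringKey)] IAsyncCollector<Weather.Common.Document> documentsOut,
    ILogger log)
```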

The Weather Collection function is triggered every hour and retrieves data from the Met Office Weather Data Hub. It collects 48 hours' worth of forecast data for each of 14 locations around the UK (one city in each of the 14 Distribution Network Operator (DNO) regions), then transposes this data so that each hour has its own record containing the forecast for every region.

This was done for several reasons, but mostly to produce numerous small, easily indexed documents that can be cheaply written to, read from and updated within Cosmos DB. This has worked well: each hour, the documents are saved to a free-tier Cosmos DB container.
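The transposition described above, from "per location, a list of hours" to "per hour, a value for every region", can be sketched with LINQ. This is a minimal illustration under stated assumptions: the input shape (region name mapped to a list of `(Hour, TemperatureC)` tuples) and the `TransposeByHour` name are hypothetical, and the real Met Office payload carries far more fields than a single temperature.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper: regroups per-region hourly forecasts so that each hour
// holds the forecast for every region. Assumes at most one forecast per region
// per hour (ToDictionary throws on duplicate keys).
static List<(DateTime Hour, Dictionary<string, double> Regions)> TransposeByHour(
    IReadOnlyDictionary<string, List<(DateTime Hour, double TemperatureC)>> forecastsByRegion) =>
    forecastsByRegion
        // Flatten to (Region, Hour, TemperatureC) triples...
        .SelectMany(region => region.Value.Select(f => (Region: region.Key, f.Hour, f.TemperatureC)))
        // ...then regroup them by forecast hour, earliest first.
        .GroupBy(x => x.Hour)
        .OrderBy(g => g.Key)
        .Select(g => (Hour: g.Key, Regions: g.ToDictionary(x => x.Region, x => x.TemperatureC)))
        .ToList();

// Example: two regions, two forecast hours each.
var start = new DateTime(2021, 1, 1, 0, 0, 0, DateTimeKind.Utc);
var forecasts = new Dictionary<string, List<(DateTime Hour, double TemperatureC)>>
{
    ["London"] = new() { (start, 5.0), (start.AddHours(1), 4.5) },
    ["Glasgow"] = new() { (start, 2.0), (start.AddHours(1), 1.5) },
};

// hourly[0] is 00:00 UTC with London = 5.0 and Glasgow = 2.0;
// hourly[1] is 01:00 UTC with London = 4.5 and Glasgow = 1.5.
var hourly = TransposeByHour(forecasts);
```

With 14 regions and 48 hourly forecasts, this produces 48 entries of 14 values each; if each entry becomes one Cosmos DB document, trimming the forecast to 24 hours would halve the number of documents written per run.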

Persisting these documents does occasionally exceed the free tier's 400 RU/s (request units per second) quota, which means writes to Cosmos DB must be retried until they succeed. While the SDK handles these retries transparently, they make the function run longer than it otherwise would, so I will probably modify it to persist only 24 hours' worth of forecast data in the next version.
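To make "retried until they succeed" concrete, here is a generic retry-with-backoff helper. It is purely illustrative: the Cosmos DB SDK already performs these retries internally (and waits for the interval the service suggests in its throttling response rather than using a fixed backoff), so COduo does not need code like this. `RetryOnThrottleAsync` and its parameters are hypothetical names, and the "throttled" exception in the example is simulated.

```csharp
using System;
using System.Threading.Tasks;

// Hypothetical helper illustrating retry-on-throttle with exponential backoff.
// The Cosmos DB SDK already does this for HTTP 429 responses; this is not its code.
static async Task<T> RetryOnThrottleAsync<T>(
    Func<Task<T>> operation,
    Func<Exception, bool> isThrottled,
    int maxAttempts = 5,
    int baseDelayMs = 100)
{
    for (int attempt = 1; ; attempt++)
    {
        try
        {
            return await operation();
        }
        // Retry only throttling errors, and only while attempts remain;
        // any other error (or the final throttled failure) propagates.
        catch (Exception ex) when (attempt < maxAttempts && isThrottled(ex))
        {
            // Exponential backoff: baseDelayMs, then 2x, 4x, ...
            await Task.Delay(baseDelayMs * (1 << (attempt - 1)));
        }
    }
}

// Example: an operation that is "throttled" twice before succeeding.
int calls = 0;
int result = await RetryOnThrottleAsync(
    () => ++calls < 3 ? throw new InvalidOperationException("429") : Task.FromResult(42),
    ex => ex is InvalidOperationException,
    baseDelayMs: 1);
```

Each retry adds its delay to the function's execution time, which is why fewer writes per run (e.g. 24 rather than 48 hours of forecasts) should shorten the function's run time.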

Energy Aggregation

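```csharp
[FunctionName("EnergyV1")]
public static async Task Energy(
    // Fires on the EnergyNormal schedule (every 15 minutes).
    [TimerTrigger(EnergyNormal)] TimerInfo timer,
    // Input binding: a DocumentClient used to read the weather documents written by WeatherV1.
    [CosmosDB(
        databaseName: CosmosDatabase,
        collectionName: WeatherCollection,
        ConnectionStringSetting = CosmosConnectionStringKey)] DocumentClient client,
    // Output binding: a writable stream to the blob the client app downloads.
    [Blob(EnergyOutputFile, FileAccess.Write, Connection = EnergyStorage)] Stream blob,
    ILogger log)
```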

The Energy Aggregation function runs every 15 minutes and requests electricity generation and composition information from a few different APIs, most notably Elexon's Balancing Mechanism Reporting Service (BMRS). This is combined with the weather data generated by the Weather Collection function, then aggregated and serialized into a JSON document that the COduo client application can easily consume.
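The aggregate-then-serialize step might be sketched as follows. This is a hedged illustration, not COduo's actual schema: the input shapes (megawatts per fuel type, temperature per region), the output property names and `WriteSnapshotAsync` are all hypothetical, and it uses `System.Text.Json` although the original may well use a different serializer. The `Stream` parameter stands in for the one supplied by the function's `[Blob]` output binding.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Linq;
using System.Text.Json;
using System.Threading.Tasks;

// Hypothetical aggregation: combine generation figures (MW per fuel type)
// with regional weather (temperature per region) into one JSON snapshot.
static async Task WriteSnapshotAsync(
    Stream output,
    IReadOnlyDictionary<string, double> generationMwByFuel,
    IReadOnlyDictionary<string, double> temperatureCByRegion,
    DateTimeOffset generatedAt)
{
    double totalMw = generationMwByFuel.Values.Sum();

    var snapshot = new
    {
        generatedAt,
        totalMw,
        // Each fuel's share of total generation as a percentage (1 d.p.);
        // guarded so an empty or zero total doesn't produce NaN.
        mixPercent = generationMwByFuel.ToDictionary(
            kv => kv.Key,
            kv => totalMw > 0 ? Math.Round(100 * kv.Value / totalMw, 1) : 0),
        weather = temperatureCByRegion.ToDictionary(kv => kv.Key, kv => kv.Value),
    };

    // In the function, output would be the Stream provided by the [Blob] binding.
    await JsonSerializer.SerializeAsync(output, snapshot);
}
```

Doing this arithmetic once on the server, rather than on every device, is what lets the client treat the snapshot as ready-to-display data.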

The serialized document is then persisted to a publicly readable blob in Azure Blob Storage's 'Hot' access tier, meaning the client application can retrieve it with a single, unauthenticated HTTPS request.
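On the client side, that single request could look something like the sketch below, which downloads the blob with `HttpClient` and parses it with `System.Text.Json`. The helper names are hypothetical and the blob URI is a placeholder, since the post does not give the real storage account, container or file name. Parsing is split from fetching so it can be exercised without a network connection.

```csharp
using System;
using System.IO;
using System.Net.Http;
using System.Text.Json;
using System.Threading.Tasks;

// Parses the snapshot JSON from any stream (a network response or a local file).
static async Task<JsonDocument> ReadSnapshotAsync(Stream body) =>
    await JsonDocument.ParseAsync(body);

// One plain GET: the blob is publicly readable, so no key or token is attached.
static async Task<JsonDocument> FetchSnapshotAsync(HttpClient http, Uri blobUri)
{
    using Stream body = await http.GetStreamAsync(blobUri);
    return await ReadSnapshotAsync(body);
}

// Usage (placeholder URI; the real account, container and file names are not given):
// using var http = new HttpClient();
// using JsonDocument snapshot = await FetchSnapshotAsync(
//     http, new Uri("https://example.blob.core.windows.net/coduo/energy.json"));
```

Because the blob is anonymous and static, it can also be cached aggressively by the client, and no credentials need to ship inside the app.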

Conclusion

At current usage levels (and using the Cosmos DB free tier), this infrastructure costs less than £1 per month to run, with only small increases (due to bandwidth costs) as application usage scales. As such, I feel it satisfies COduo's requirements very neatly. Furthermore, Visual Studio's impressive tooling for developing and testing Azure Functions locally (including local emulators for all the storage services) streamlines feature delivery and the regression testing of changes, so I've been able to iterate on this project extremely quickly.

More information

I've deliberately kept this post at a "mile-high" level, as the series is focused on using the Uno Platform to deliver a cross-platform application. However, if you're keen to understand more about how these service-side components operate, drop me a line (contact links at the bottom of the page) and, if enough people are interested, I'll write a blog post detailing these approaches further.

Part 3

Part 3 will examine the architecture of COduo, with the aim of explaining how its primary components interoperate to provide a robust and testable experience across multiple platforms and dual screens.

Finally

I hope you enjoy this series and that it goes some way to demonstrating the massive potential of the Uno Platform for delivering cross-platform experiences without having to invest in additional staff training or bifurcate your development efforts.

If you or your company are interested in building apps that can leverage the dual-screen capabilities of new devices such as the Surface Duo and Surface Neo, or are keen to understand how a single codebase can deliver apps to every platform from mobile phones to websites, then please feel free to drop me a line using any of the links below or from my about page. I am actively seeking new clients in this space and would be happy to discuss any ideas you have or projects you're planning.